Google Research's Titans Reinvents Transformers in Artificial Intelligence

In the world of artificial intelligence, language models based on the Transformer architecture have revolutionized the way long sequences of data are processed. However, these models face a major problem: the cost of attention grows quadratically with the length of the input, so memory and computation time quickly become prohibitive in tasks such as language modeling, video analysis, and time series forecasting. To address this challenge, Google Research has introduced Titans, a new architecture that promises to be a revolutionary change in the field.
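To make the scaling problem concrete, this short Python sketch counts the query-key scores a single attention layer computes. Full self-attention scores every pair of tokens, so the count grows as n * n, while attention restricted to a window of w recent tokens needs about n * w. The helper names are hypothetical, and the window size of 4,096 is an arbitrary value chosen for illustration, not a Titans setting.

```python
def full_attention_scores(n: int) -> int:
    """Query-key scores for full self-attention: every token against every token."""
    return n * n


def windowed_attention_scores(n: int, window: int) -> int:
    """Scores when each query only looks at the most recent `window` keys (upper bound)."""
    return n * min(n, window)


for n in (2_000, 16_000, 2_000_000):
    full = full_attention_scores(n)
    windowed = windowed_attention_scores(n, window=4_096)
    print(f"n={n:>9,}  full={full:.2e}  window(4096)={windowed:.2e}")
```

Going from 2,000 to 2,000,000 tokens multiplies the full-attention count by a million, which is why long contexts call for something other than plain attention.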

One of the most interesting aspects of Titans is how it tries to replicate something that humans do naturally: being surprised when we find something new or unexpected.

In technical terms, Titans' long-term memory measures how "surprising" each new input is: the more an input deviates from what the memory predicts, the larger the gradient of the memory's loss, and the more strongly that input is written into long-term memory. Because this update happens while the model is running, Titans keeps learning even at test time. It's as if the model is "paying special attention" to anything that doesn't fit with what it already knows.

For example, imagine you are watching a series of numbers that follow a clear pattern, like 1, 2, 3, 4, 5. If a 99 suddenly appears, it immediately catches your attention. For Titans, this 99 would be a surprising data point: it would trigger a large update to the memory, so that the anomaly can inform how future data is interpreted.
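The surprise idea can be sketched in a few lines of Python. In the Titans paper, surprise is measured by the gradient of an associative-memory loss, the squared error between what the memory returns for a key and the value actually observed. This sketch assumes a deliberately tiny memory, a linear rule v = w * k + b that has already learned "add 1", and uses each previous number as the key and the current number as the value. The function names are illustrative and do not come from any Titans codebase.

```python
from typing import List, Tuple


def loss_and_grad(w: float, b: float, k: float, v: float) -> Tuple[float, Tuple[float, float]]:
    """Squared error (M(k) - v)^2 for the toy memory M(k) = w * k + b, and its gradient."""
    error = (w * k + b) - v
    loss = error ** 2
    grad_w = 2 * error * k   # d loss / d w
    grad_b = 2 * error       # d loss / d b
    return loss, (grad_w, grad_b)


def surprise_scores(sequence: List[float], w: float = 1.0, b: float = 1.0) -> List[float]:
    """Gradient norm (the 'surprise') for each previous -> current pair."""
    scores = []
    for prev, cur in zip(sequence, sequence[1:]):
        _, (gw, gb) = loss_and_grad(w, b, k=prev, v=cur)
        scores.append((gw ** 2 + gb ** 2) ** 0.5)
    return scores


print(surprise_scores([1, 2, 3, 4, 5, 99]))
```

The four in-pattern steps produce a surprise of exactly 0.0, while the jump to 99 produces a gradient norm of roughly 948. That large gradient is the signal that would drive a strong memory write.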

Why is this capability important?

- Real-time adaptation: Unlike traditional models, which need to be retrained to incorporate new information, Titans updates its long-term memory on the fly while it processes input.

- Better understanding of complex contexts: This approach allows it to handle data that does not follow strict patterns, such as human language or sales fluctuations.

- Memory efficiency: Instead of keeping every past token in a growing cache, as standard Transformers do, Titans stores information in a fixed-size memory, prioritizes what is surprising, and uses a forgetting mechanism to discard what is no longer needed.
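Real-time adaptation and forgetting can be illustrated with a memory update modeled on the rule described in the Titans paper: a momentum term S accumulates surprise (S_t = eta * S_{t-1} - theta * gradient), and the memory is written with a forgetting factor (M_t = (1 - alpha) * M_{t-1} + S_t). This is a minimal sketch under assumptions: the memory is the same kind of toy linear rule v = w * k + b rather than the deep network used in the paper, and eta, theta and alpha are fixed constants, whereas Titans makes them data-dependent. The class name `LinearMemory` is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LinearMemory:
    """Toy long-term memory that predicts a value from a key as w * k + b."""

    w: float = 1.0    # starts out knowing the rule "add 1"
    b: float = 1.0
    s_w: float = 0.0  # accumulated surprise (momentum) for w
    s_b: float = 0.0  # accumulated surprise (momentum) for b

    def update(self, k: float, v: float,
               eta: float = 0.9, theta: float = 0.001, alpha: float = 0.01) -> None:
        """One test-time write: surprise with momentum, then forgetting."""
        error = (self.w * k + self.b) - v          # M_{t-1}(k_t) - v_t
        grad_w, grad_b = 2 * error * k, 2 * error  # gradient of the squared error
        # S_t = eta * S_{t-1} - theta * gradient (past surprise + momentary surprise)
        self.s_w = eta * self.s_w - theta * grad_w
        self.s_b = eta * self.s_b - theta * grad_b
        # M_t = (1 - alpha) * M_{t-1} + S_t (forget a little, then write)
        self.w = (1 - alpha) * self.w + self.s_w
        self.b = (1 - alpha) * self.b + self.s_b


sequence = [1, 2, 3, 4, 5, 99]
memory = LinearMemory()
for prev, cur in zip(sequence, sequence[1:]):
    memory.update(prev, cur, alpha=0.0)  # alpha = 0 disables forgetting in this run
print(f"w = {memory.w:.2f}, b = {memory.b:.3f}")
```

In this run the first four pairs fit the learned rule, so the memory does not change; the 99 moves w from 1.0 to about 1.93. Setting alpha above zero shrinks the stored weights at every step, which is how a forgetting gate frees capacity for new information.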

This "surprise" mechanism emulates how humans learn from experience, where the events that break our expectations are the ones we remember best. It makes Titans more efficient and brings its behavior closer to the way humans learn. It is a big step towards artificial intelligence systems that understand and react more naturally to the world around them.

What makes Titans different?

The main innovation of Titans is its focus on memory. This model combines two types of memory:

- Short-term memory: Uses attention over a limited window of recent tokens to capture immediate dependencies precisely.

- Long-term memory: Integrates a neural memory module that stores historical information and lets the model scale to extremely long contexts of more than 2 million tokens.
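The difference between reading from the two memories can be sketched in Python. Assumptions for illustration: the short-term memory is softmax attention over only the last `window` key-value pairs, and the long-term memory is a fixed-size matrix read by multiplying it with the query, the simplest possible stand-in for the neural memory module (which in the paper is a deeper network). All function names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def softmax(scores: List[float]) -> List[float]:
    top = max(scores)                          # subtract the max for numerical stability
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def short_term_read(query: Vector, keys: List[Vector], values: List[Vector],
                    window: int) -> Vector:
    """Scaled dot-product attention restricted to the most recent `window` tokens."""
    keys, values = keys[-window:], values[-window:]
    weights = softmax([dot(query, k) / math.sqrt(len(query)) for k in keys])
    return [sum(w * v[i] for w, v in zip(weights, values))
            for i in range(len(values[0]))]


def long_term_read(memory: List[Vector], query: Vector) -> Vector:
    """Read a fixed-size linear memory: M @ query, regardless of how long the history was."""
    return [dot(row, query) for row in memory]


keys = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
query = [1.0, 0.0]
print(short_term_read(query, keys, values, window=2))  # sees only the last 2 tokens
print(long_term_read([[0.5, 0.0], [0.0, 0.5]], query))
```

The cost of the short-term read grows with the window, while the long-term read always touches the same d x d matrix, however many tokens were written into it.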

Thanks to this combination, Titans manages to maintain a balance between efficiency and learning capacity.

Three variants for different needs

Titans includes three main variants, each designed to integrate memory in a different way:

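- MAC (Memory as a Context): Retrieves relevant historical information from the long-term memory and gives it to attention as extra context. It excels at handling long dependencies and detecting complex patterns in large volumes of data.

- MAG (Memory as a Gate): Runs sliding-window attention and the long-term memory in parallel branches and combines their outputs through a gating mechanism. Its results are similar to those of MAC.

- MAL (Memory as a Layer): Places the long-term memory as a layer in front of attention. Although less powerful than the other two variants, it still outperforms many existing hybrid models.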
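How each variant wires memory and attention together can be sketched as function composition. This is a structural sketch only, based on one reading of the Titans paper: `memory`, `attention` and `gate` are placeholder callables supplied by the caller, memory writes are omitted, and the `persistent` argument stands for the learnable, input-independent "persistent memory" tokens that the paper prepends to the context. All names are hypothetical.

```python
from typing import Callable, List

Vector = List[float]
Sequence = List[Vector]
SeqFn = Callable[[Sequence], Sequence]


def mal(x: Sequence, memory: SeqFn, attention: SeqFn) -> Sequence:
    """Memory as a Layer: the memory processes the input, then attention runs on its output."""
    return attention(memory(x))


def mag(x: Sequence, memory: SeqFn, attention: SeqFn,
        gate: Callable[[Vector, Vector], Vector]) -> Sequence:
    """Memory as a Gate: attention and memory run in parallel and a gate mixes them per token."""
    return [gate(a, m) for a, m in zip(attention(x), memory(x))]


def mac(x: Sequence, memory: SeqFn, attention: SeqFn,
        persistent: Sequence, segment_size: int) -> Sequence:
    """Memory as a Context: each segment attends over [persistent | retrieved | segment]."""
    outputs: Sequence = []
    for start in range(0, len(x), segment_size):
        segment = x[start:start + segment_size]
        retrieved = memory(segment)                  # historical context for this segment
        context = persistent + retrieved + segment
        outputs.extend(attention(context)[-len(segment):])  # keep the segment's positions
    return outputs


def identity(seq: Sequence) -> Sequence:
    return seq


def average(a: Vector, m: Vector) -> Vector:
    return [(p + q) / 2 for p, q in zip(a, m)]


tokens = [[1.0], [2.0], [3.0], [4.0], [5.0]]
print(mal(tokens, identity, identity))
print(mag(tokens, identity, identity, average))
print(mac(tokens, identity, identity, persistent=[[0.0]], segment_size=2))
```

With identity placeholders all three variants simply return the input, which makes the sketch easy to check; plugging in real attention and memory modules is where the variants start to behave differently.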

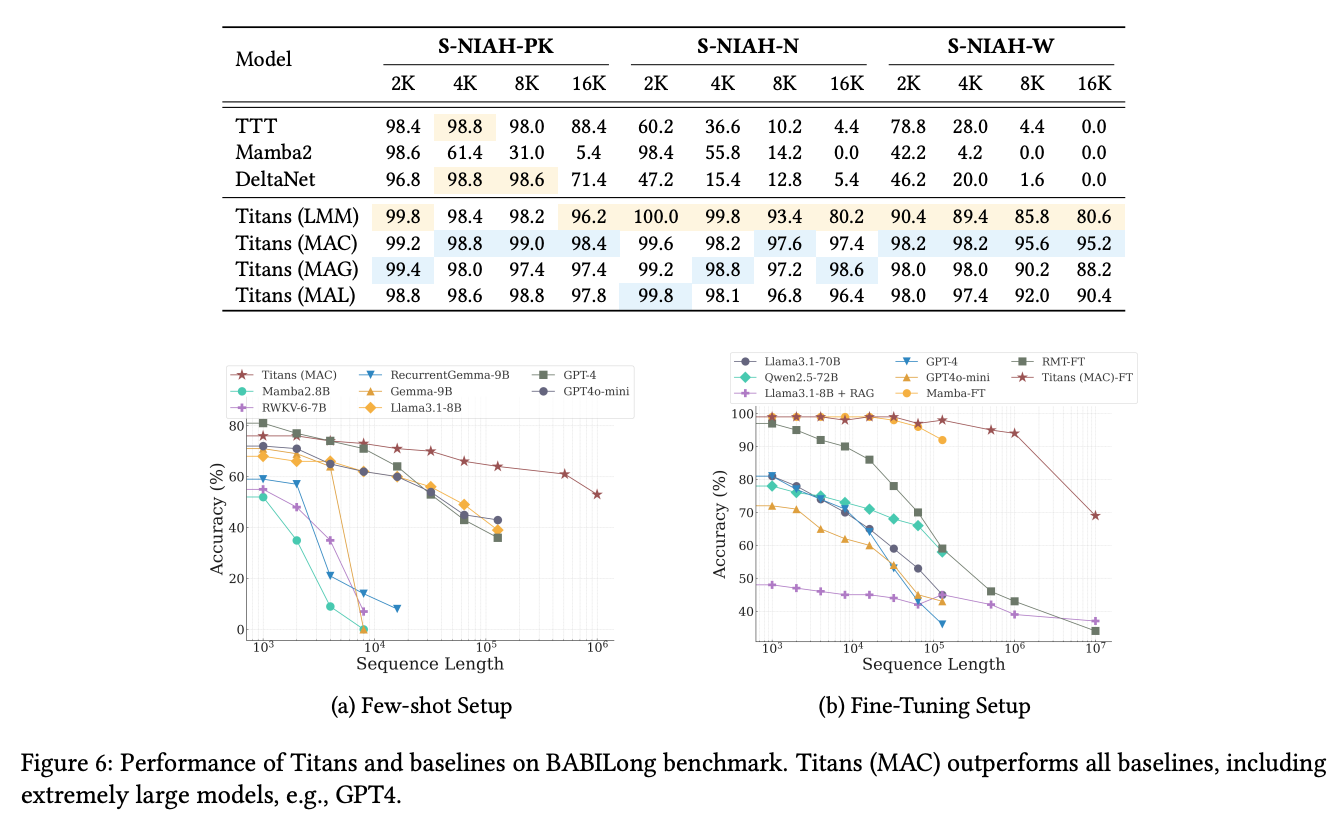

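These variants stand out in demanding tasks such as "needle in a haystack" retrieval, where the model must locate a single piece of information hidden in a long stretch of distracting text. In these tests they efficiently handle sequences of between 2,000 and 16,000 tokens.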
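A needle-in-a-haystack test is easy to picture with a small generator. This sketch is only loosely inspired by such benchmarks and is not the code of any published benchmark: it counts whitespace-separated words instead of model tokens, and the filler vocabulary, the `make_needle_example` name and the "magic-number" needle format are invented for illustration.

```python
import random
from typing import Tuple

FILLER = ["the", "grass", "is", "green", "and", "the", "sky", "is", "blue"]


def make_needle_example(num_words: int, seed: int = 0) -> Tuple[str, str, str]:
    """Build a toy prompt: filler text with one hidden fact, plus a question and its answer."""
    rng = random.Random(seed)                      # seeded so examples are reproducible
    haystack = [rng.choice(FILLER) for _ in range(num_words)]
    answer = str(rng.randint(1_000_000, 9_999_999))
    position = rng.randrange(num_words)            # where the needle is hidden
    haystack[position] = f"magic-number={answer}"  # replaces exactly one filler word
    question = "What is the magic number mentioned in the text?"
    return " ".join(haystack), question, answer


for length in (2_000, 4_000, 8_000, 16_000):
    text, question, answer = make_needle_example(length, seed=length)
    print(length, len(text.split()), answer in text)
```

A model passes an example if, given the text and the question, it returns the hidden number; making the haystack longer makes that single fact harder to find.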

Technical innovations

Titans' design includes technical improvements that make it stand out:

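- Residual connections: Used in every block, they facilitate the flow of information through the model.

- SiLU activation: Serves as the non-linear activation when computing queries, keys, and values.

- 1D depthwise-separable convolutional layers: Applied after the query, key, and value projections, they add local context from neighboring tokens at a low computational cost.

- ℓ2 normalization: Queries and keys are normalized with the ℓ2 norm before the memory uses them.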
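The query, key, and value path formed by these components can be sketched in pure Python. Assumptions: the order projection, then depthwise-separable convolution, then SiLU, then ℓ2 normalization (queries and keys only) is one plausible reading rather than a confirmed implementation detail; a single set of convolution weights is shared by the three branches to keep the example short; and all names are hypothetical. Residual connections are left out because they wrap whole blocks rather than this path.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(W: Matrix, x: Vector) -> Vector:
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def silu(x: float) -> float:
    """SiLU(x) = x * sigmoid(x), written to avoid overflow for large negative x."""
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    z = math.exp(x)
    return x * z / (1.0 + z)


def l2_normalize(v: Vector, eps: float = 1e-6) -> Vector:
    norm = math.sqrt(sum(t * t for t in v))
    return [t / max(norm, eps) for t in v]


def depthwise_separable_conv1d(seq: List[Vector], depth_kernels: Matrix,
                               pointwise: Matrix) -> List[Vector]:
    """Causal depthwise conv over time (one kernel per channel), then a 1x1 conv mixing channels."""
    out = []
    for t in range(len(seq)):
        depthwise = []
        for c, kernel in enumerate(depth_kernels):
            # kernel[j] weights the token j steps in the past; future tokens are never used
            depthwise.append(sum(w * seq[t - j][c]
                                 for j, w in enumerate(kernel) if t - j >= 0))
        out.append(matvec(pointwise, depthwise))
    return out


def project_qkv(seq: List[Vector], Wq: Matrix, Wk: Matrix, Wv: Matrix,
                depth_kernels: Matrix, pointwise: Matrix):
    def branch(W: Matrix, normalize: bool) -> List[Vector]:
        projected = [matvec(W, x) for x in seq]
        mixed = depthwise_separable_conv1d(projected, depth_kernels, pointwise)
        activated = [[silu(v) for v in x] for x in mixed]
        return [l2_normalize(x) for x in activated] if normalize else activated
    return branch(Wq, True), branch(Wk, True), branch(Wv, False)


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 2.0], [3.0, 4.0], [-1.0, 0.5]]
q, k, v = project_qkv(tokens, identity, identity, identity,
                      depth_kernels=[[1.0, 0.5], [1.0, 0.5]], pointwise=identity)
print([round(math.sqrt(sum(t * t for t in row)), 6) for row in q])  # query lengths
```

Every query and key row comes out with unit length, while values keep their scale; the causal convolution mixes each token only with the tokens before it.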

These features allow Titans not only to process large amounts of data, but to do so with greater accuracy and less resource consumption.

Why does Titans matter?

Titans represents a major step forward in artificial intelligence. Its ability to handle extensive contexts opens up new possibilities in areas such as:

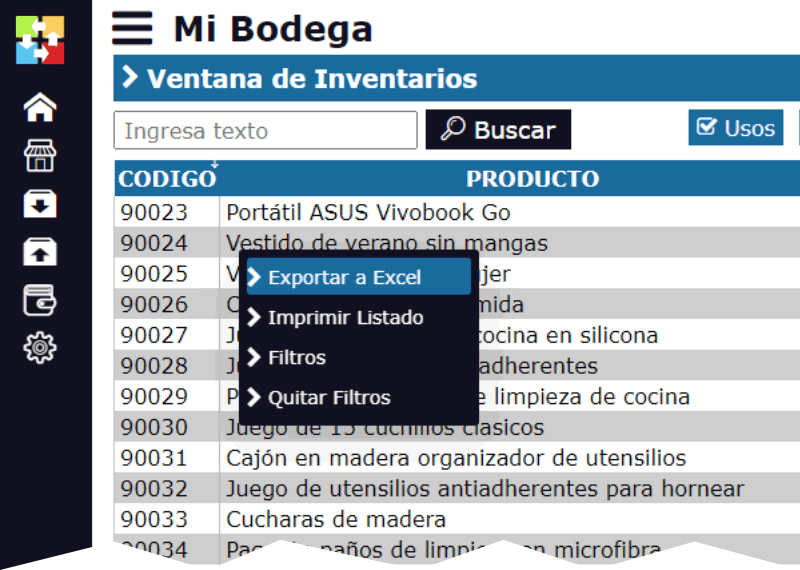

- Historical data analysis: For example, in sales or inventories.

- Trend prediction: In sectors such as finance or marketing.

- Natural language processing: For smarter chatbots and more accurate automatic translations.

In addition, Titans' architecture demonstrates that it is possible to overcome the context-length limitations of standard Transformer-based models, paving the way for new applications.

Conclusion

Google Research has presented an innovative solution to one of the biggest challenges in sequence modeling: handling long contexts. Titans is an architecture capable of memorizing, learning, and adapting at test time, and it promises to transform how machine learning models are developed and used in the future.

While Titans is an impressive technical achievement, it also reminds us that technology continues to evolve to face the most complex challenges. What other wonders will the future of artificial intelligence bring? Only time will tell.